Airflow has many contenders now, but it still holds strong when it comes to orchestrating and scheduling tasks in the data engineering field.

If you are running self-hosted Airflow in your organization, it helps to understand what the system is made of so that you can fix it fast when it breaks. Let’s look at its architecture.

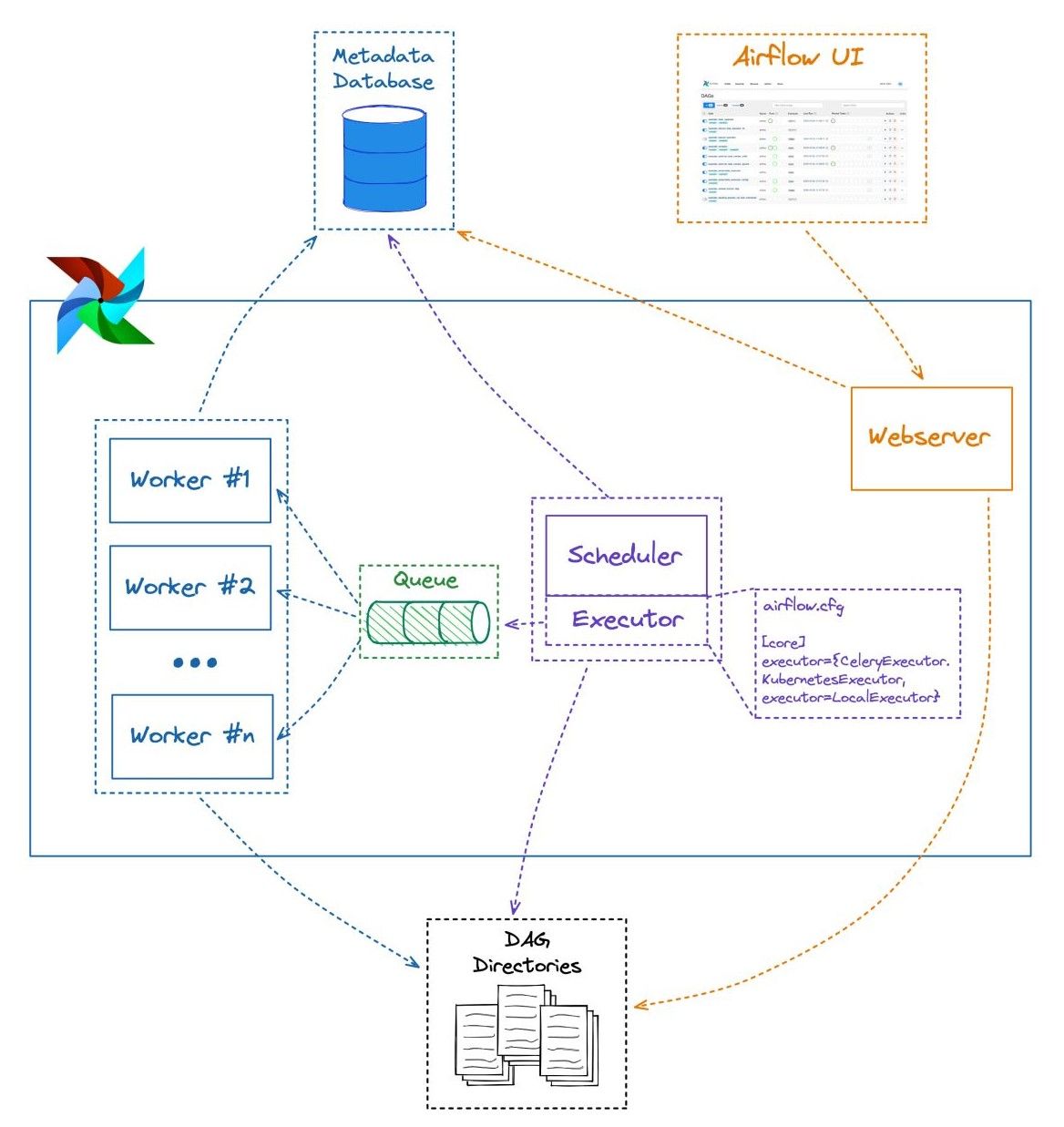

Airflow is composed of several components that work together to run workflows. Here they are:

𝗦𝗰𝗵𝗲𝗱𝘂𝗹𝗲𝗿.

➡️ Central piece of Airflow architecture.

➡️ Triggers scheduled workflows.

➡️ Submits tasks to the executor.

𝗘𝘅𝗲𝗰𝘂𝘁𝗼𝗿.

➡️ Part of the scheduler process.

➡️ Handles task execution.

➡️ In production workloads, pushes tasks to workers for execution.

➡️ Can be configured to execute against different systems (Celery, Kubernetes, etc.).

𝗪𝗼𝗿𝗸𝗲𝗿.

➡️ The unit that actually performs work.

➡️ In production setups it usually takes work in the form of tasks from a queue placed between workers and the executor.
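
The Scheduler, Executor and Worker hand-off described above can be sketched with nothing but the Python standard library. This is a toy model, not Airflow code: `QueueExecutor`, `run_scheduler` and `worker` are hypothetical names, and an in-process `queue.Queue` stands in for whatever broker sits between the executor and the workers in a real deployment.

```python
import queue
import threading


def worker(task_queue, results, lock):
    """Worker: pulls tasks off the queue and runs them until it sees the sentinel."""
    while True:
        task = task_queue.get()
        if task is None:  # sentinel value: no more work for this worker
            break
        name, fn = task
        outcome = fn()
        with lock:
            results[name] = outcome


class QueueExecutor:
    """Executor (hypothetical): does not run tasks itself, only queues them."""

    def __init__(self, task_queue):
        self.task_queue = task_queue

    def submit(self, name, fn):
        self.task_queue.put((name, fn))


def run_scheduler(tasks, num_workers=2):
    """Scheduler (hypothetical): decides what should run and submits it to the executor."""
    task_queue = queue.Queue()
    results, lock = {}, threading.Lock()
    workers = [
        threading.Thread(target=worker, args=(task_queue, results, lock))
        for _ in range(num_workers)
    ]
    for w in workers:
        w.start()

    executor = QueueExecutor(task_queue)
    for name, fn in tasks.items():
        executor.submit(name, fn)

    for _ in workers:  # one sentinel per worker so each of them exits
        task_queue.put(None)
    for w in workers:
        w.join()
    return results


results = run_scheduler(
    {"extract": lambda: 3, "transform": lambda: 3 * 2, "load": lambda: "done"}
)
```

The point of the sketch is the separation of concerns: the scheduler never touches the work itself, and workers never decide what should run.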

𝗠𝗲𝘁𝗮𝗱𝗮𝘁𝗮 𝗗𝗮𝘁𝗮𝗯𝗮𝘀𝗲.

➡️ Database used by the Scheduler, Executor and Webserver to store state.
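
To make the idea of shared state concrete, here is a minimal sketch that uses an in-memory SQLite database. The `task_state` table and the `set_state` / `get_states` helpers are invented for illustration and are much simpler than Airflow's real schema; the only point is that several components write to and read from the same store.

```python
import sqlite3

# In-memory database standing in for the metadata DB (illustrative only).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE task_state ("
    "dag_id TEXT, task_id TEXT, state TEXT, "
    "PRIMARY KEY (dag_id, task_id))"
)


def set_state(conn, dag_id, task_id, state):
    """Write path: what a scheduler or worker would do when a task changes state."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT OR REPLACE INTO task_state (dag_id, task_id, state) "
            "VALUES (?, ?, ?)",
            (dag_id, task_id, state),
        )


def get_states(conn, dag_id):
    """Read path: what a UI would do to show the current state of a DAG."""
    rows = conn.execute(
        "SELECT task_id, state FROM task_state WHERE dag_id = ? ORDER BY task_id",
        (dag_id,),
    )
    return dict(rows.fetchall())


set_state(conn, "etl", "extract", "queued")    # scheduler queues the task
set_state(conn, "etl", "extract", "running")   # worker picks it up
set_state(conn, "etl", "extract", "success")   # worker reports the result
set_state(conn, "etl", "load", "scheduled")    # scheduler plans the next task

ui_view = get_states(conn, "etl")
```

Because every component goes through the same database, a slow or locked write in one place is felt by all the others.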

𝗗𝗔𝗚 𝗱𝗶𝗿𝗲𝗰𝘁𝗼𝗿𝗶𝗲𝘀.

➡️ Airflow DAGs are defined in Python code.

➡️ This is where you store the DAG code; Airflow is configured to look for DAGs here.
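
As a rough illustration of what "looking for DAGs" in a folder means, the sketch below scans a directory for Python files that look like DAG definitions. It is a simplified model, not Airflow's implementation: `find_candidate_dag_files` is a hypothetical helper, and the rule it applies (keep only files that mention both "airflow" and "dag", case-insensitively) is an assumption modeled on Airflow's default safe-mode discovery heuristic.

```python
import tempfile
from pathlib import Path


def find_candidate_dag_files(dags_folder):
    """Return names of .py files that mention both 'airflow' and 'dag'."""
    candidates = []
    for path in sorted(Path(dags_folder).rglob("*.py")):
        text = path.read_text(encoding="utf-8", errors="ignore").lower()
        if "airflow" in text and "dag" in text:
            candidates.append(path.name)
    return candidates


# Build a throwaway DAG folder with one DAG-like file and one plain helper module.
with tempfile.TemporaryDirectory() as folder:
    Path(folder, "etl_dag.py").write_text("from airflow import DAG\n")
    Path(folder, "helpers.py").write_text("def add(a, b):\n    return a + b\n")
    found = find_candidate_dag_files(folder)
```

In this model only `etl_dag.py` would be handed on for parsing; `helpers.py` is skipped because it never mentions Airflow.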

𝗪𝗲𝗯𝘀𝗲𝗿𝘃𝗲𝗿.

➡️ This is a Flask application that allows users to explore, debug and partially manipulate Airflow DAGs, users and configuration.

❗️ The two most important parts are the Scheduler and the Metadata DB.

❗️ Even if the Webserver is down, tasks will still be executed as long as the Scheduler is healthy.

❗️ Metadata DB transaction locks can cause problems for other services.